Topline Summary

AI can generate realistic-looking fake people, and Stable Diffusion makes that easier than ever. I've deployed a model you can try yourself that detects whether a person in an image was generated by Stable Diffusion, with 93% out-of-sample accuracy.

AI Can Generate Realistic-Looking Faces

thispersondoesnotexist.com blew everyone away when it launched in 2019. Each time you refreshed the page, the website generated a brand-new,[1] entirely fabricated person.

The site used generative adversarial networks (GANs) and provided code templates for generating your own fake people. Some folks pointed out at the time that sharing these templates could have negative consequences.

Negative Side Effects Abound

For example, a network of bots using GAN-generated pictures boosted tweets by a pro-secessionist candidate for lieutenant governor in Texas. And that's far from the only bot swarm that used GAN-generated faces to look more like real people.

While these GAN-generated faces look realistic, they have some telltale visual cues that can help distinguish them from genuine photos. For example, GAN-generated faces tend to place the eyes in exactly the same position in the frame. So while these faces can be tricky to spot, we've gotten better at screening for them.[2]
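Because the cue is that the eyes land in the same spot, it is easiest to use across a batch of images, such as the profile pictures of a suspected bot network. Here is a minimal sketch of such a check. It is not part of the model described below, and it assumes you already have eye-center coordinates from some face-landmark detector (not shown). The function names and the `tolerance` value are hypothetical placeholders, not a calibrated screening tool.

```python
from math import dist
from statistics import mean

# Each face is ((left_eye_x, left_eye_y), (right_eye_x, right_eye_y), width, height),
# in pixels. The eye coordinates are assumed to come from a landmark detector (not shown).


def normalize(point, width, height):
    """Convert pixel coordinates to fractions of the image width and height."""
    x, y = point
    return (x / width, y / height)


def eye_position_spread(faces):
    """Largest distance (in normalized units) between any image's eye position
    and the average eye position across the whole batch."""
    lefts = [normalize(left, w, h) for left, right, w, h in faces]
    rights = [normalize(right, w, h) for left, right, w, h in faces]
    mean_left = (mean(p[0] for p in lefts), mean(p[1] for p in lefts))
    mean_right = (mean(p[0] for p in rights), mean(p[1] for p in rights))
    return max(
        max(dist(p, mean_left) for p in lefts),
        max(dist(p, mean_right) for p in rights),
    )


def looks_aligned_like_gan(faces, tolerance=0.02):
    """Flag a batch whose eyes all sit in (nearly) the same spot.
    The 0.02 tolerance is an arbitrary placeholder, not a tuned value."""
    return len(faces) >= 2 and eye_position_spread(faces) <= tolerance


# Hypothetical coordinates for a 1000x1000 image and a 2000x2000 image.
batch = [((385, 480), (615, 480), 1000, 1000), ((770, 962), (1230, 958), 2000, 2000)]
print(looks_aligned_like_gan(batch))  # True
```

Real photos that were cropped and aligned by face-detection software could also pass this check, so a match is a reason to look closer rather than proof.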

Enter Stable Diffusion.

The Potential for Negative Side Effects Only Increases with Stable Diffusion

To create a lot of faces with a GAN, you had to either refresh thispersondoesnotexist.com by hand many times or know how to code. Generating realistic-looking faces with Stable Diffusion is much simpler.

If you haven’t heard of Stable Diffusion, it’s an AI model that can convert text prompts like “A cute penguin in front of a giant stack of pancakes, shot on iPhone” into an image like this.

You can also write other kinds of prompts[3] that generate realistic-looking people, and the resulting images carry few, if any, telltale visual cues that they were generated by Stable Diffusion.

This person does not exist

Neither does this person

And they don’t either.

I Built and Deployed a Model to Detect Whether People Were Generated by Stable Diffusion

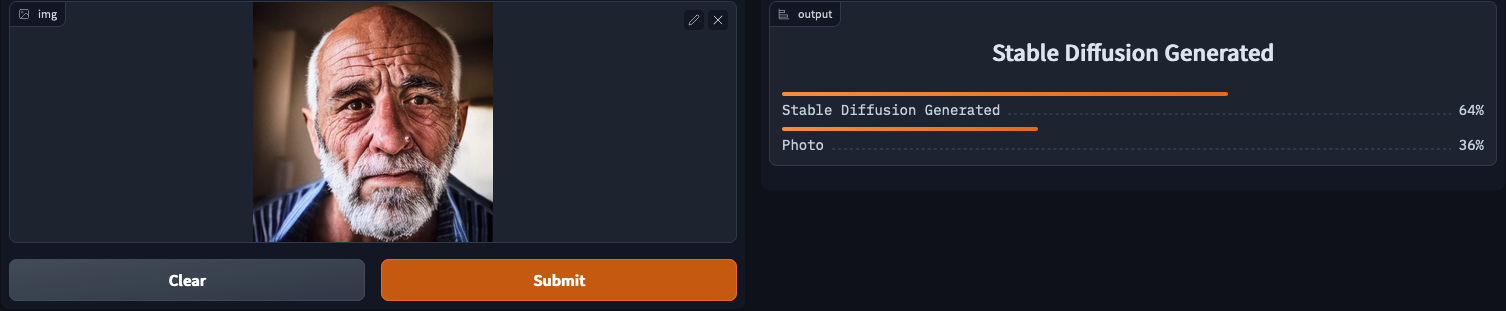

You can find the deployed model here. It correctly classifies all of the example images,[4] and it has an out-of-sample accuracy of 93% and an out-of-sample F1 score of 0.88.
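Accuracy and F1 can differ because they measure different things. Accuracy is the share of all images classified correctly; F1 is the harmonic mean of precision and recall for the positive class, so it ignores true negatives entirely. The sketch below treats "generated by Stable Diffusion" as the positive class (an assumption), and its confusion-matrix counts are made up for illustration; they are not from my test set.

```python
def accuracy_and_f1(tp, fp, fn, tn):
    """Return (accuracy, F1) from binary confusion-matrix counts.

    tp: generated images flagged as generated  (true positives)
    fp: real images flagged as generated       (false positives)
    fn: generated images flagged as real       (false negatives)
    tn: real images flagged as real            (true negatives)
    """
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall; define it as 0 if both are 0.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, f1


# Made-up counts: 100 held-out images, 30 of them generated.
acc, f1 = accuracy_and_f1(tp=25, fp=3, fn=5, tn=67)
print(f"accuracy={acc:.2f}, F1={f1:.2f}")  # accuracy=0.92, F1=0.86
```

In this made-up example the 67 true negatives count toward accuracy but not toward F1, so the 8 mistakes pull F1 down further than accuracy.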

You can test the deployed model with any real or Stable Diffusion-generated images you’d like. Please send interesting successes and failures to me on Twitter or Mastodon!

This is an initial proof of concept, so please don’t use it in any production product. If you’re interested in building on this idea, I’d love to hear from you!

How Did You Build This Model?

I had a lot of fun building this model with Python, PyTorch, and the fastai wrapper library. I got to do plenty of interesting work with APIs, loops, and query optimization.

Unlike most of my projects, I’m not going to share my code publicly. I don’t want to provide a direct blueprint for how to programmatically generate a lot of realistic-looking fake people. I’m kind of bummed, because I think it’s some of the best Python programming I’ve done, but I still think it’s the right thing to do.

I understand that folks have a variety of opinions about this, but I think the potential harms outweigh any potential benefits.[5]

If you want to get a sense of how I generally approached my model-building, I’d recommend you check out Practical Deep Learning for Coders.

Conclusion

I think Stable Diffusion is a powerful tool, and we need to think carefully about how to wield that power. Models that help detect whether an image was generated by Stable Diffusion are not a silver bullet: pervasive, believable, yet false imagery is a problem that far exceeds any one technical solution.

And as we grapple with the proliferation of models like Stable Diffusion, having some technical tools like this model in our toolbelt feels like a good bet.

Footnotes

[1] Or almost brand new: if you refresh the site enough, you definitely get repeats.

[2] Though newer generations of GAN-generated faces will likely be even harder to detect; see https://nvlabs.github.io/stylegan3/

[3] Which I won’t be sharing here; more on that later!

[4] Huge shout-out to Hugging Face Spaces + Gradio for making such a great interface for push-button model deployments.

[5] Especially since we know people have already used open-source fake-person generators for ill: the risk isn’t hypothetical!